We're excited to announce NVIDIA GPU support in LayerOps! With this update, your services can run GPU-intensive workloads such as AI/ML applications and Ollama.

New Features

External GPU Instance Support

You can now attach external machines equipped with NVIDIA GPUs to your LayerOps infrastructure. Supported machines run Ubuntu and have the NVIDIA drivers installed, which makes it easy to integrate GPU hardware you already own.

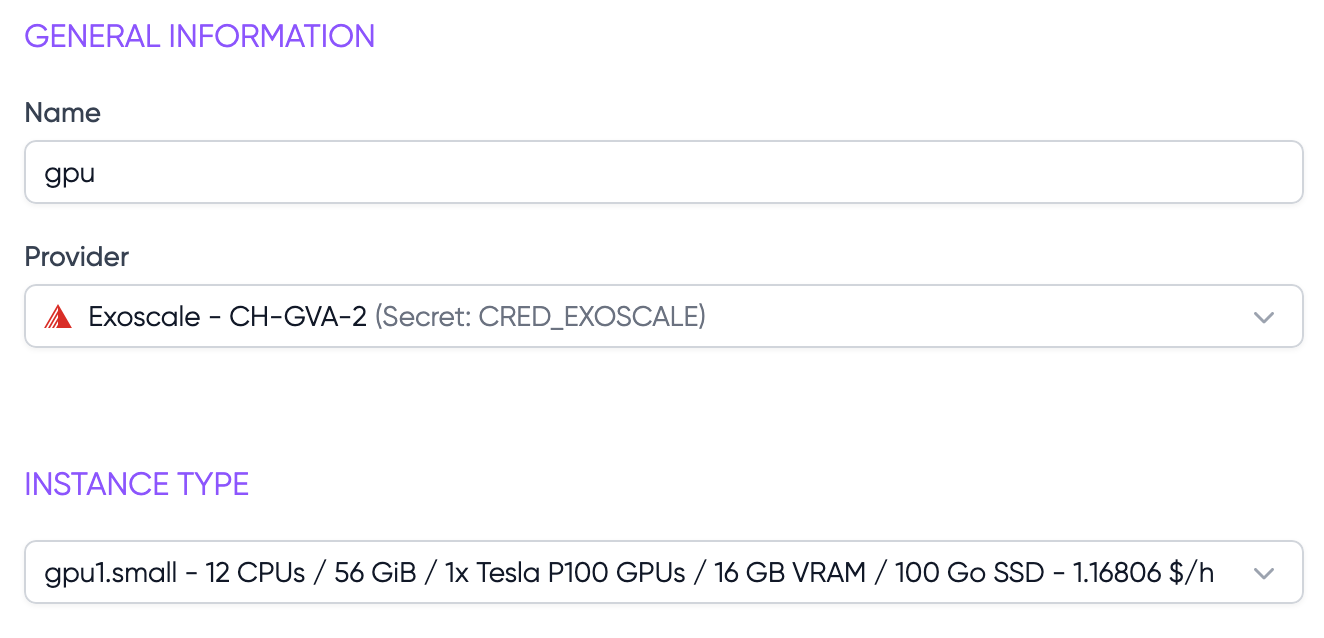
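Before attaching a machine, it can help to confirm that the NVIDIA driver is actually working. The following is a minimal sketch, not part of LayerOps itself: it assumes the standard `nvidia-smi` utility that ships with the NVIDIA driver is on the `PATH`, and the helper names `parse_gpu_rows` and `detect_gpus` are illustrative.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY_FIELDS = "name,driver_version,memory.total"


def parse_gpu_rows(csv_text):
    """Parse `nvidia-smi --format=csv,noheader` output into a list of dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        name, driver, memory = parts
        gpus.append(
            {"name": name, "driver_version": driver, "memory_total": memory}
        )
    return gpus


def detect_gpus():
    """Return the GPUs reported by nvidia-smi, or an empty list if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities not installed or not on PATH
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []  # driver present but not functioning
    return parse_gpu_rows(result.stdout)


if __name__ == "__main__":
    gpus = detect_gpus()
    if not gpus:
        print("No NVIDIA GPU detected; check the driver installation.")
    for gpu in gpus:
        print(f"{gpu['name']} (driver {gpu['driver_version']}, {gpu['memory_total']})")
```

If the script lists at least one GPU, the driver is installed and responding, which is the prerequisite described above.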

GPU-Compatible Cloud Provider Integration

LayerOps can now deploy GPU-enabled instances through your configured cloud providers, so your GPU workloads scale automatically with demand. GPU-compatible instance types from major cloud providers are supported.

GPU Service Deployment

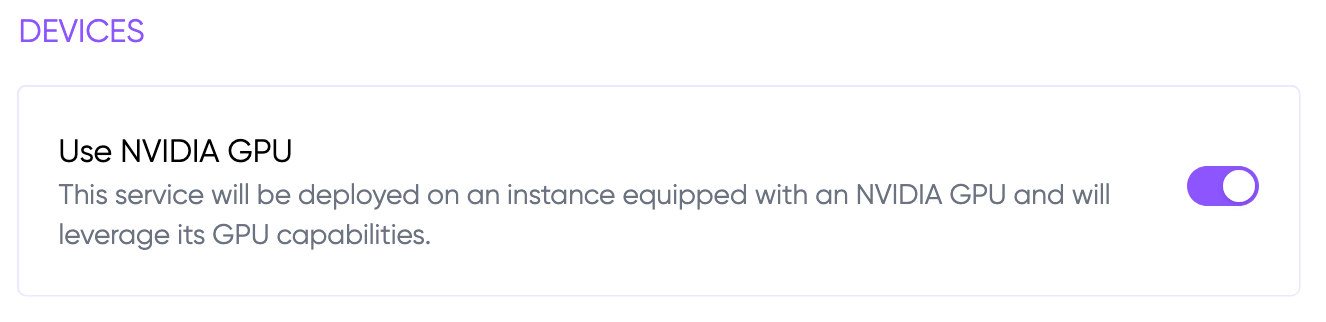

Configuring a service to use NVIDIA GPUs is straightforward: enable GPU capabilities in the "Devices" section of the service configuration, and LayerOps automatically deploys the service to GPU-enabled instances.

Your GPU-intensive workloads land on the appropriate infrastructure without any additional configuration.

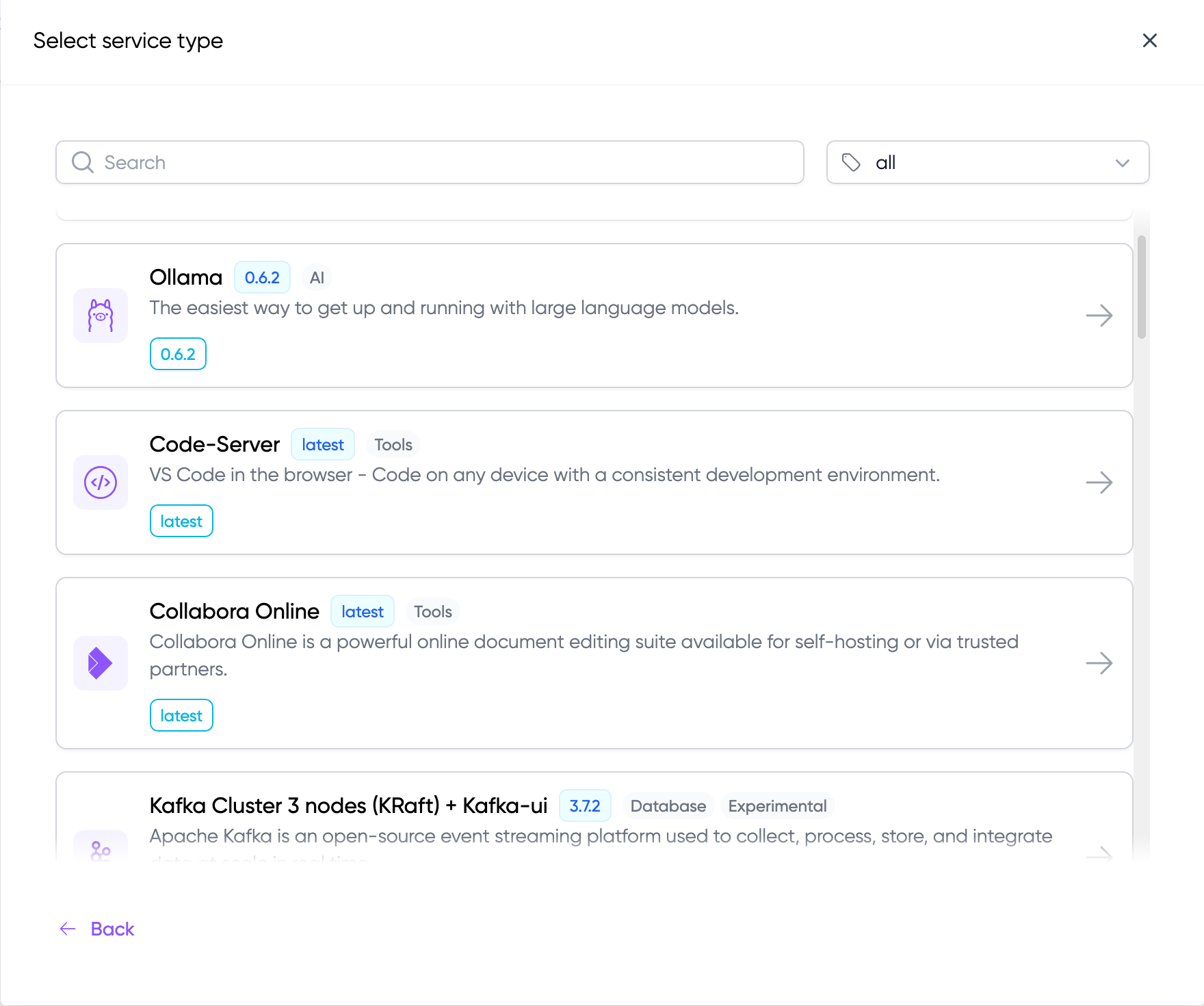

Ollama in Marketplace

Ollama is now available in the LayerOps Marketplace, enabling quick deployment of Large Language Models (LLMs). Combined with our new GPU support, you can efficiently run these models on your GPU-enabled infrastructure.

Key features include:

- One-click deployment of Ollama with GPU support

- Automatic resource allocation and scaling

- Seamless integration with LayerOps monitoring and logging
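Once an Ollama service is running, you can send it prompts over Ollama's HTTP API. The sketch below uses only the Python standard library and Ollama's `/api/generate` endpoint. The base URL (Ollama's default port on `localhost`) and the model name `llama3` are assumptions: substitute the address your service is exposed on and a model you have already pulled. The helper names are illustrative.

```python
import json
import urllib.request

# Assumption: replace with the address your Ollama service is exposed on.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt, model="llama3", base_url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3", base_url=OLLAMA_URL, timeout=120):
    """Send the prompt to Ollama and return the generated text."""
    request = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With "stream": False, the reply is a single JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

Calling `generate("Explain what a GPU is in one sentence.")` returns the model's answer as a string, provided the service is reachable and the model is available.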